
Pouya Pezeshkpour, Liyan Chen, Sameer Singh


Knowledge bases (KBs) are an essential part of many computational systems, with applications in search, structured data management, recommendations, question answering, and information retrieval. However, KBs often suffer from incompleteness, noisy entries, and inefficient inference under uncertainty. To address these issues, learning relational KBs by representing entities and relations in an embedding space has been a focus of active research. Nevertheless, real-world knowledge bases contain a wide variety of data types, such as text, images, and numerical values, which current methods ignore. We propose multimodal knowledge base embeddings (MKBE), which use different neural encoders for this variety of observed data and combine them with existing relational models to learn embeddings of the entities and multimodal data. Further, using these learned embeddings and different neural decoders, we introduce a novel multimodal imputation model that generates missing multimodal values, such as text and images, from information in the knowledge base.


Method


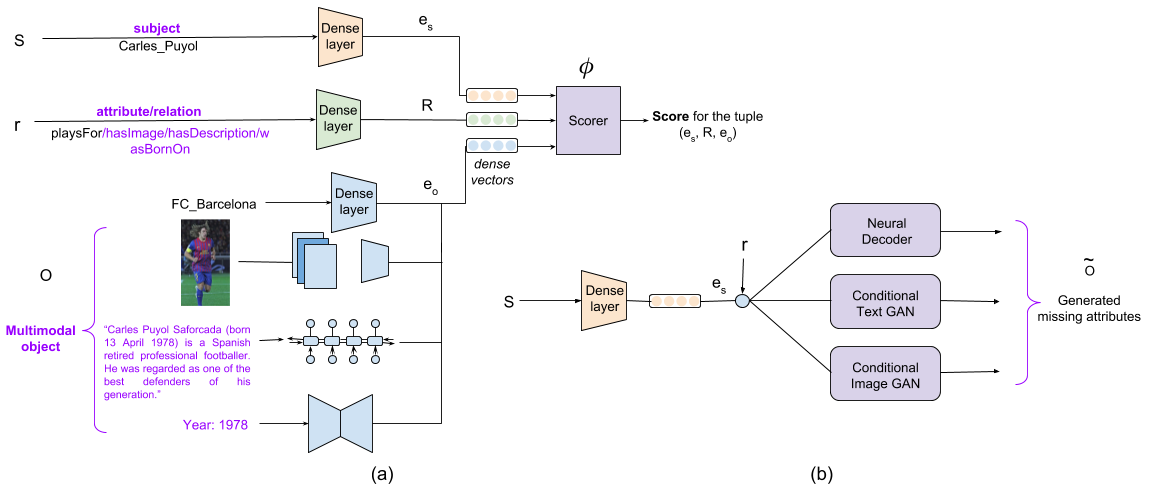

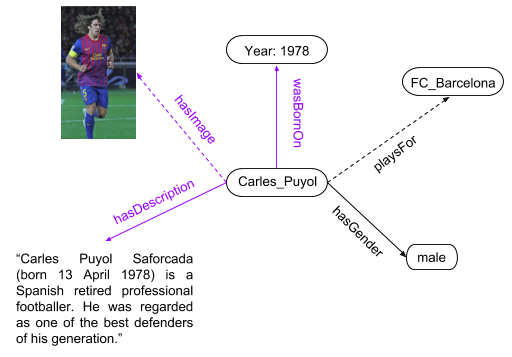

To incorporate multimodal objects such as text, images, and numerical values into existing relational models like DistMult and ConvE, we propose to learn embeddings for these types of data as well. We use recent advances in deep learning to construct encoders for these objects, essentially providing an embedding for any object value. The overall goal remains the same: the model needs to use all the observed subjects, objects, and relations, across different data types, to estimate whether any fact holds. An example instantiation of MKBE for a knowledge base containing YAGO entities is shown at the beginning of this overview. For any triple, we embed the subject (Carles Puyol) and the relation (such as playsFor or wasBornOn) using a direct lookup. For the object, depending on its domain (indexed entity, string, numerical, or image), we use an appropriate encoder to compute its embedding. Via these neural encoders, the model can use the information content of multimodal objects to predict missing links. However, learning embeddings for objects in M (multimodal attributes) is not sufficient to generate missing multimodal values, i.e., to answer queries ⟨s, r, ?⟩ where the object is in M. Consequently, we introduce a set of neural decoders that use entity embeddings to generate multimodal values. An outline of our model for imputing missing values is depicted in the figure above.
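The encoder-plus-scorer idea can be sketched in a few lines of pure Python. This is a minimal illustration under stated assumptions, not the MKBE implementation: embeddings are random, DistMult (score = Σᵢ e_s[i] · w_r[i] · e_o[i]) is used as the relational scorer, and `encode_number` and `encode_string` are hypothetical stand-ins for the learned neural encoders. Image objects would need a convolutional encoder and are omitted here.

```python
import math
import random

random.seed(0)
DIM = 4  # embedding size (hypothetical)


def rand_vec(dim=DIM):
    return [random.uniform(-0.5, 0.5) for _ in range(dim)]


# Direct-lookup tables for subjects, relations, and indexed (entity) objects.
entity_emb = {"Carles_Puyol": rand_vec(), "FC_Barcelona": rand_vec()}
relation_emb = {"playsFor": rand_vec(), "wasBornOn": rand_vec()}

# Hypothetical numerical encoder: one dense layer followed by tanh.
# Inputs are assumed to be normalized beforehand.
num_w, num_b = rand_vec(), rand_vec()


def encode_number(x):
    return [math.tanh(w * x + b) for w, b in zip(num_w, num_b)]


# Hypothetical string encoder: mean of per-character embeddings,
# a simple stand-in for a learned neural text encoder.
char_emb = {}


def encode_string(text):
    if not text:
        return [0.0] * DIM
    vecs = []
    for ch in text:
        if ch not in char_emb:
            char_emb[ch] = rand_vec()
        vecs.append(char_emb[ch])
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def encode_object(obj, domain):
    """Pick an encoder based on the object's domain."""
    if domain == "indexed":
        return entity_emb[obj]
    if domain == "numerical":
        return encode_number(obj)
    if domain == "string":
        return encode_string(obj)
    raise ValueError(f"no encoder for domain {domain!r}")


def distmult_score(subject, relation, obj, domain):
    """DistMult score: sum_i e_s[i] * w_r[i] * e_o[i]."""
    e_s = entity_emb[subject]          # direct lookup
    w_r = relation_emb[relation]       # direct lookup
    e_o = encode_object(obj, domain)   # domain-specific encoder
    return sum(a * b * c for a, b, c in zip(e_s, w_r, e_o))


def fact_probability(score):
    return 1.0 / (1.0 + math.exp(-score))


p_club = fact_probability(distmult_score("Carles_Puyol", "playsFor", "FC_Barcelona", "indexed"))
# 0.56 is a hypothetical, already-normalized numeric value.
p_year = fact_probability(distmult_score("Carles_Puyol", "wasBornOn", 0.56, "numerical"))
```

Because every object type is mapped into the same embedding space, one scorer applies to all triples; swapping DistMult for ConvE would change only `distmult_score`.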

|

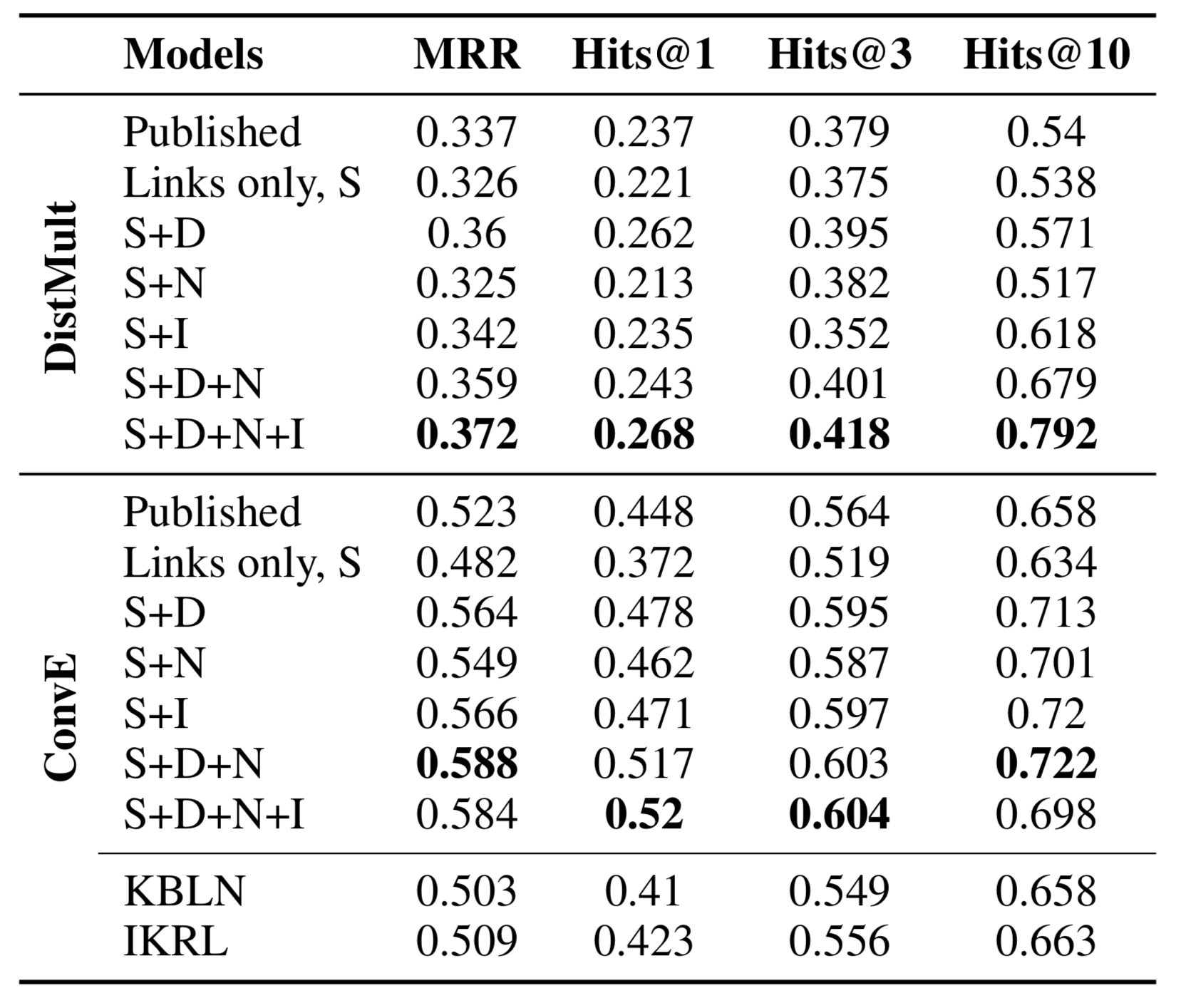

Link Prediction

We evaluate the capability of MKBE on the link prediction task. The goal is to recover the missing entity of each test triple: we rank all candidate entities, compute the rank of the correct one, and report mean reciprocal rank (MRR) and Hits@k. Following previous work, we report results in the filtered setting, i.e., each test triple is ranked only against corrupted triples that appear in neither the training nor the test data. The link prediction results on the extended YAGO-10 dataset (YAGO-10 enriched with numerical, text, and image attributes) are provided in the table.
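The filtered ranking protocol and the two metrics can be made concrete with a small sketch. The function names are hypothetical and scores are assumed to be precomputed (higher is better); ties are counted in the target's favor here, which is one of several conventions.

```python
def filtered_rank(scores, target, known_true):
    """Rank of `target` among all candidates, skipping other known-true objects.

    scores: dict mapping candidate entity -> model score (higher is better)
    target: the correct object of the test triple
    known_true: objects o' such that (s, r, o') appears in train or test data
    """
    target_score = scores[target]
    rank = 1
    for cand, score in scores.items():
        if cand == target or cand in known_true:
            continue  # filtered setting: never penalize other true answers
        if score > target_score:
            rank += 1
    return rank


def mrr_and_hits(ranks, ks=(1, 3, 10)):
    """Mean reciprocal rank and Hits@k over a list of ranks."""
    n = len(ranks)
    mrr = sum(1.0 / r for r in ranks) / n
    hits = {k: sum(1 for r in ranks if r <= k) / n for k in ks}
    return mrr, hits


scores = {"a": 0.9, "b": 0.8, "c": 0.7, "d": 0.1}
print(filtered_rank(scores, "c", known_true={"a"}))  # → 2 ("a" is filtered out)
print(filtered_rank(scores, "c", known_true=set()))  # → 3 (raw rank)
```

Filtering matters because a candidate that forms another true triple should not count as a mistake; without it, the correct entity can be pushed down by equally valid answers.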


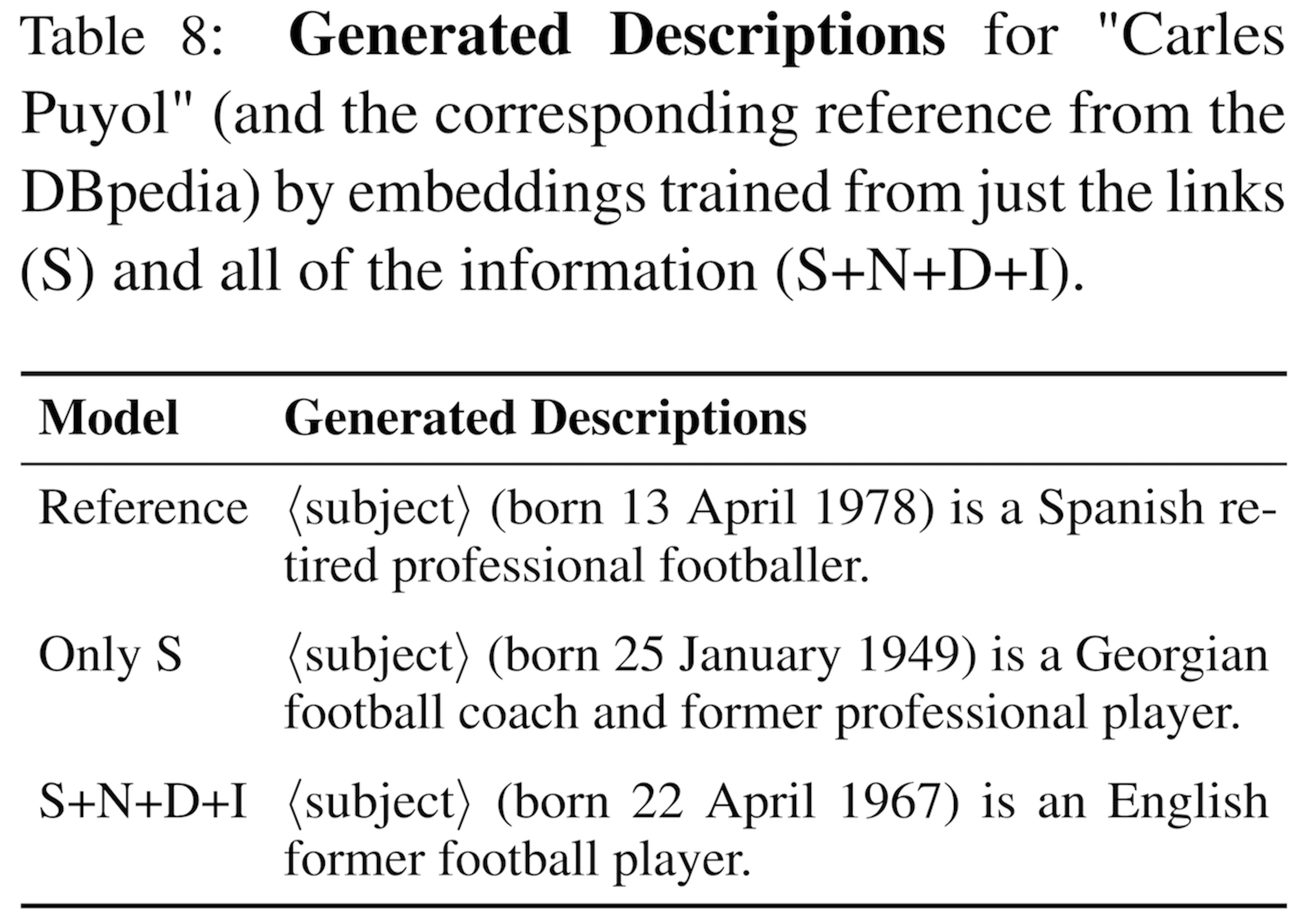

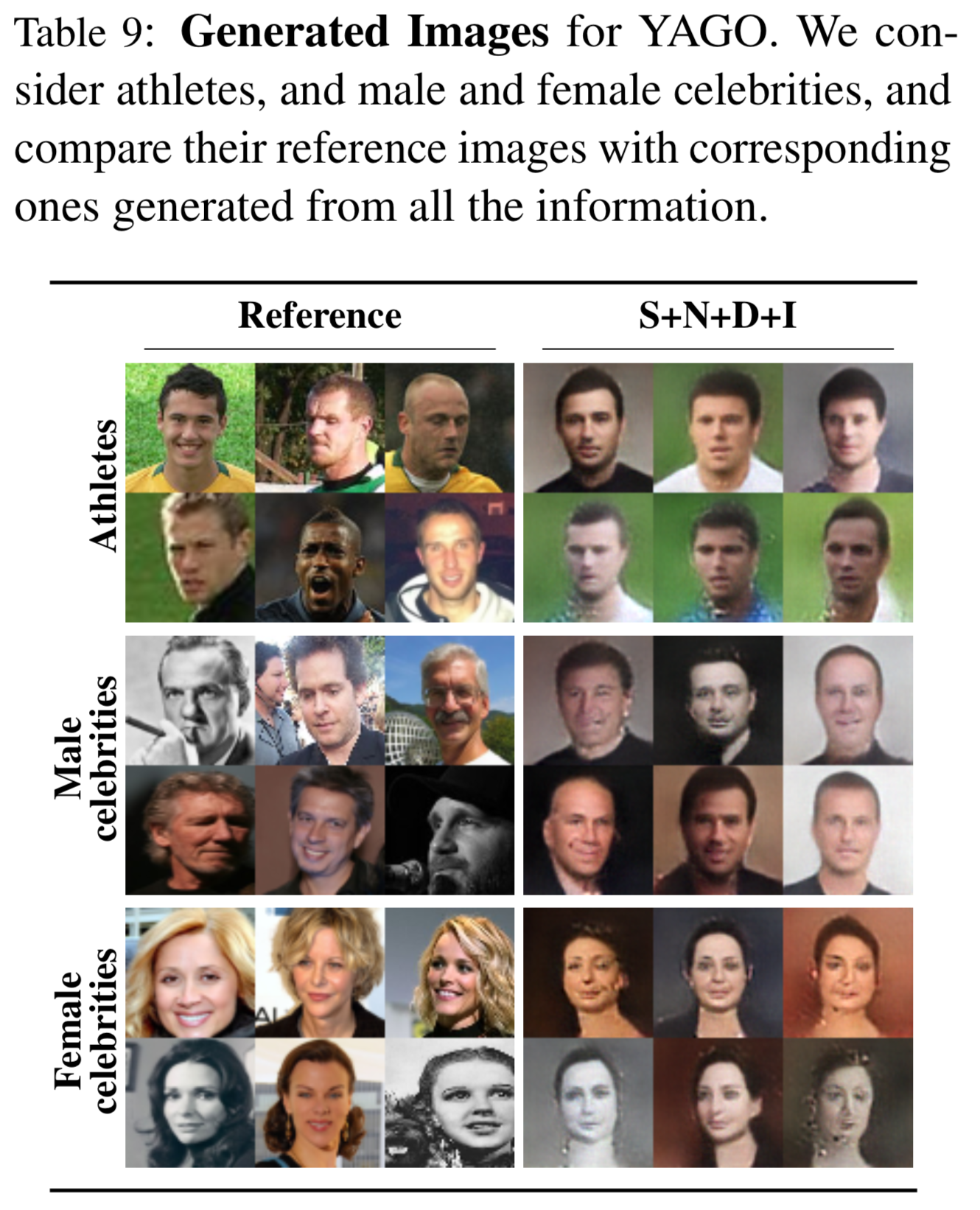

Imputing Multimodal Attributes

Here we describe the decoders we use to generate multimodal values for entities from their embeddings. The multimodal imputation model, shown on the right side of our network figure, uses different neural decoders to generate missing attributes (more details are provided in the supplementary materials). To recover missing numerical and categorical data such as dates, gender, and occupation, we use a simple feed-forward network on the entity embedding to predict the missing attributes. These decoders are trained on the embeddings produced by the encoder component, with appropriate losses (RMSE for numerical attributes and cross-entropy for categorical ones). Along the same lines, to generate grammatical, linguistically coherent sentences and meaningful images, we use generative adversarial networks (GANs) conditioned on the entity embeddings from the previous section. Some generated samples are presented below.
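The numerical and categorical decoders described above amount to a feed-forward network on the entity embedding plus a task-specific loss. The following pure-Python sketch shows only the forward pass and the two losses; the weights, the 2-d embedding, and the `date_head` / `gender_head` names are hypothetical, training is omitted, and the GAN-based text and image decoders are not covered.

```python
import math


def linear(x, W, b):
    """Affine map: W is a list of rows (out_dim x in_dim), b has out_dim entries."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def relu(v):
    return [max(0.0, a) for a in v]


def feed_forward(x, W1, b1, W2, b2):
    """One-hidden-layer decoder applied to an entity embedding x."""
    return linear(relu(linear(x, W1, b1)), W2, b2)


def rmse(preds, targets):
    """Loss for numerical attributes (e.g., a normalized date)."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(preds))


def cross_entropy(logits, label):
    """Loss for categorical attributes (e.g., gender), via a stable log-sum-exp."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_z - logits[label]


# Hypothetical weights: a shared 2-unit hidden layer and two output heads.
W1, b1 = [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]
date_head = ([[2.0, 3.0]], [0.5])                        # one numerical output
gender_head = ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0])   # two class logits

embedding = [1.0, -2.0]  # entity embedding from the encoders (hypothetical)
date_pred = feed_forward(embedding, W1, b1, *date_head)        # [2.5]
gender_logits = feed_forward(embedding, W1, b1, *gender_head)  # [1.0, -1.0]

date_loss = rmse(date_pred, [2.0])               # 0.5
gender_loss = cross_entropy(gender_logits, 0)    # log(1 + e^-2)
```

Sharing the hidden layer across heads is a design choice made here for brevity; separate networks per attribute would fit the description equally well.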


YAGO Demo

Here we study generating missing attributes from the knowledge graph representation. We consider an imaginary entity with only two relations, occupation and gender. Using the learned graph embeddings, we compute the embedding of this entity and, using this embedding as conditional information, generate a description and an image for the entity.
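The page does not say how the embedding of a new entity is computed from its two known relations. One plausible approach, shown here purely as an assumption, is to freeze the relation and object embeddings and fit only the new entity's vector by gradient descent on the logistic loss of its known DistMult-scored facts, with L2 regularization. All vectors and names below are hypothetical.

```python
import math


def distmult(e_s, w_r, e_o):
    return sum(a * b * c for a, b, c in zip(e_s, w_r, e_o))


def objective(e, facts, lam):
    """Logistic loss of the known facts plus an L2 penalty on the new embedding."""
    loss = sum(math.log1p(math.exp(-distmult(e, w_r, e_o))) for w_r, e_o in facts)
    return loss + lam * sum(x * x for x in e)


def fit_new_entity(facts, dim, lam=0.1, lr=0.1, steps=200):
    """Gradient descent on `objective`; relation/object embeddings stay fixed."""
    e = [0.0] * dim
    for _ in range(steps):
        grad = [2.0 * lam * x for x in e]
        for w_r, e_o in facts:
            s = distmult(e, w_r, e_o)
            coeff = -1.0 / (1.0 + math.exp(s))  # d/ds log(1 + e^-s) = -sigmoid(-s)
            for i in range(dim):
                grad[i] += coeff * w_r[i] * e_o[i]  # ds/de[i] = w_r[i] * e_o[i]
        e = [x - lr * g for x, g in zip(e, grad)]
    return e


# Hypothetical frozen embeddings for (hasGender, male) and (hasOccupation, footballer).
facts = [
    ([0.5, -0.2, 0.1, 0.3], [0.4, 0.1, -0.3, 0.2]),
    ([0.2, 0.6, -0.1, 0.0], [0.1, 0.5, 0.2, -0.4]),
]
new_entity = fit_new_entity(facts, dim=4)
```

The resulting vector could then serve as the conditioning input to the decoders. Because the objective is convex in `e`, plain gradient descent with a small step size is enough here.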


subject (born 4 August 1986) is a Polish footballer who plays for GKS Academical.


MovieLens Demo

Similar to the previous section, here we consider an imaginary movie with only two relations, genre and release date. Using the learned graph embeddings, we compute the embedding of this movie and, using this embedding as conditional information, generate a title for it.


Double Game


Conclusion

Motivated by the need to use multiple sources of information, such as text and images, to achieve more accurate link prediction, we present a novel neural approach to multimodal relational learning. We introduce MKBE, a link prediction model that consists of (1) a compositional encoding component that jointly learns the entity and multimodal embeddings to encode the information available for each entity, and (2) an adversarially trained decoding component that uses these entity embeddings to impute missing multimodal values. We enrich two existing datasets, YAGO-10 and MovieLens-100k, with multimodal information to introduce new benchmarks. We show that MKBE, compared to the existing link predictors DistMult and ConvE, achieves higher accuracy on link prediction by using the multimodal evidence. Further, we show that MKBE effectively incorporates relational information to generate high-quality multimodal attributes such as images and text.
